Curvature Tensors

This post discusses the concept of curvature in manifolds and various tensor fields which measure this curvature.

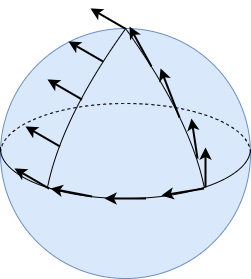

Consider the following figure, which depicts the parallel transport of vectors along a closed loop on a sphere:

If the vector on the bottom right is parallel transported toward the north pole, then toward the equator, then along the equator, returning to its original point, the direction of the vector changes: it was originally pointing "northward", but now is pointing "westward". This change in direction is due to the curvature of the sphere.
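The loop just described can be checked numerically. The following is a minimal sketch, not a general parallel transport routine: it assumes the unit sphere embedded in \(\mathbb{R}^3\) and relies on the fact that parallel transport along a great-circle arc agrees with the rotation of \(\mathbb{R}^3\) that carries the arc along itself. The helper names (`cross`, `dot`, `transport_along_great_circle`) and the particular choice of points are illustrative assumptions.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Euclidean inner product."""
    return sum(x * y for x, y in zip(a, b))

def transport_along_great_circle(p, q, v):
    """Transport the tangent vector v from p to q along the shorter
    great-circle arc, using Rodrigues' rotation formula about p x q."""
    axis = cross(p, q)
    norm = math.sqrt(dot(axis, axis))
    k = tuple(x / norm for x in axis)          # unit rotation axis
    angle = math.atan2(norm, dot(p, q))        # angle between p and q
    c, s = math.cos(angle), math.sin(angle)
    k_cross_v, k_dot_v = cross(k, v), dot(k, v)
    return tuple(v[i] * c + k_cross_v[i] * s + k[i] * k_dot_v * (1 - c)
                 for i in range(3))

start = (1.0, 0.0, 0.0)        # a point on the equator
north_pole = (0.0, 0.0, 1.0)
second = (0.0, -1.0, 0.0)      # a quarter turn away along the equator

v0 = (0.0, 0.0, 1.0)           # tangent at `start`, pointing north
v1 = transport_along_great_circle(start, north_pole, v0)
v2 = transport_along_great_circle(north_pole, second, v1)
v3 = transport_along_great_circle(second, start, v2)   # back at `start`
```

With this choice of loop, `v3` is tangent to the equator at `start` and perpendicular to `v0`: the vector has turned through a right angle, which is also the area of the enclosed octant of the unit sphere.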

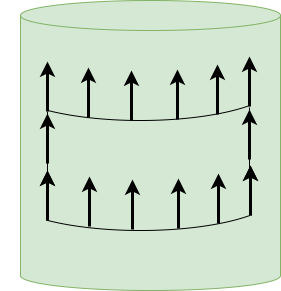

However, when the same process is performed on a cylinder, the resultant vector is the same as the original vector, as in the following figure:

This is because a cylinder is an example of a "flat" manifold; it has no intrinsic curvature, although, when embedded in 3-dimensional space, it can be considered to have extrinsic curvature.

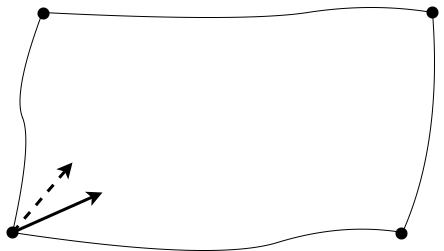

In fact, this observation can be used to define and measure curvature on arbitrary manifolds. The idea is as follows: parallel transport vectors along closed, "rectangular" loops, as in the following figure:

Measure the displacement vector as the difference between the original vector and the transported vector. Next, contract this rectangle, so that it becomes arbitrarily small. Define curvature as an appropriate limit involving the displacement vector.

To make this precise, we need the notion of a parametric family of curves, i.e. we need to be able to trace the outline of each rectangle using curves.

Definition (Parametric Family of Curves). A parametric family of curves is a continuous map \(\Gamma : J \times I \rightarrow M\), where \(I, J \subseteq \mathbb{R}\) are intervals and \(M\) is a smooth manifold. The primary curves are the curves \(\Gamma_s : I \rightarrow M\), one for each \(s \in J\), defined as \(\Gamma_s(t) = \Gamma(s,t)\) for every \(t \in I\). The transverse curves are the curves \(\Gamma^{(t)} : J \rightarrow M\), one for each \(t \in I\), defined as \(\Gamma^{(t)}(s) = \Gamma(s,t)\) for every \(s \in J\).

A parametric family of curves thus represents a sort of "grid" of "horizontal" and "vertical" curves that can be overlaid on a manifold. Such families can be used to "trace" the "rectangles" described above.
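As a concrete illustration, here is a small sketch of a family of curves on the unit sphere, where \(s\) plays the role of longitude and \(t\) the role of colatitude. The function names (`family`, `primary_curve`, `transverse_curve`) and the choice of sphere are assumptions made for this example only.

```python
import math

def family(s, t):
    """Gamma(s, t): the point on the unit sphere with longitude s
    and colatitude t."""
    return (math.sin(t) * math.cos(s),
            math.sin(t) * math.sin(s),
            math.cos(t))

def primary_curve(s):
    """Gamma_s: fix s and let t vary (a meridian)."""
    return lambda t: family(s, t)

def transverse_curve(t):
    """Gamma^(t): fix t and let s vary (a circle of latitude)."""
    return lambda s: family(s, t)

meridian = primary_curve(0.3)
latitude = transverse_curve(1.1)

# Both curves pass through the grid point Gamma(0.3, 1.1).
crossing = family(0.3, 1.1)
```

Here `meridian(1.1)` and `latitude(0.3)` both evaluate to `crossing`, which is exactly the "grid" picture: every point \(\Gamma(s,t)\) lies on one primary curve and one transverse curve.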

Just as we can define vector fields along curves, we can likewise define vector fields along families of curves.

Definition (Vector Field along a Family of Curves). A vector field along a parametric family of curves \(\Gamma : J \times I \rightarrow M\) is a continuous map \(V : J \times I \rightarrow TM\) such that \(V(s,t) \in T_{\Gamma(s,t)}M\) for all \((s,t) \in J \times I\).

We denote the velocity vectors of the primary curves as

\[\partial_t\Gamma(s,t) = (\Gamma_s)'(t) \in T_{\Gamma(s,t)}M\]

and we denote the velocity vectors of the transverse curves as

\[\partial_s\Gamma(s,t) = (\Gamma^{(t)})'(s) \in T_{\Gamma(s,t)}M.\]

Thus, the velocity vectors are examples of vector fields along \(\Gamma\).

We likewise use the following notation:

- \[V_s : I \rightarrow TM, V_s(t) = V(s,t),\]

- \[V^{(t)} : J \rightarrow TM, V^{(t)}(s) = V(s,t).\]

Likewise, just as we can define the covariant derivative of a vector field along a curve, we can also define the covariant derivative of a vector field along a parametric family of curves using only a slightly modified definition.

Definition (Covariant Derivative along a Family of Curves). Given a family of curves \(\Gamma : J \times I \rightarrow M\), the primary covariant derivative of a vector field \(V : J \times I \rightarrow TM\) along \(\Gamma\) is defined as

\[D_tV(s,t) = D_tV_s(t),\]

where \(D_tV_s(t)\) is the covariant derivative along the primary curve \(\Gamma_s\), and the transverse covariant derivative of \(V\) along \(\Gamma\) is defined as

\[D_sV(s,t) = D_sV^{(t)}(s),\]

where \(D_sV^{(t)}(s)\) is the covariant derivative along the transverse curve \(\Gamma^{(t)}\).

Thus, given smooth coordinates \((x^i)\) in a neighborhood of \(\Gamma(s,t)\), the coordinate expressions are as follows:

\begin{align}D_tV(s,t) &= D_tV_s(t) \\&= D_t(V_s^i(t)\partial_i\rvert_{\Gamma_s(t)}) \\&= \dot{V}_s^i(t)\partial_i\rvert_{\Gamma_s(t)} + V_s^i(t)D_t\partial_i\rvert_{\Gamma_s(t)} \\&= \frac{\partial V^i}{\partial t}(s,t)\partial_i\rvert_{\Gamma(s, t)} + V^i(s, t)D_t\partial_i\rvert_{\Gamma(s,t)}.\end{align}

\begin{align}D_sV(s,t) &= D_sV^{(t)}(s) \\&= D_s((V^{(t)})^i(s)\partial_i\rvert_{\Gamma^{(t)}(s)}) \\&= ((V^{(t)})^i)'(s)\partial_i\rvert_{\Gamma^{(t)}(s)} + (V^{(t)})^i(s)D_s\partial_i\rvert_{\Gamma^{(t)}(s)} \\&= \frac{\partial V^i}{\partial s}(s,t)\partial_i\rvert_{\Gamma(s, t)} + V^i(s, t)D_s\partial_i\rvert_{\Gamma(s,t)}.\end{align}

Likewise, we can also define parallel transport for families of curves.

Definition (Parallel Transport along a Family of Curves). Given a Riemannian (or semi-Riemannian) manifold \(M\) and a smooth family of curves \(\Gamma : I \times I \rightarrow M\) defined on an open interval \(I \subseteq \mathbb{R}\) such that \(0 \in I\), the parallel transport along the primary curve \(\Gamma_{s_1}\) from time \(t_1\) to time \(t_2\) is defined as

\[P_{s_1,t_1}^{s_1,t_2} = P_{t_1,t_2}^{\Gamma_{s_1}} : T_{\Gamma(s_1,t_1)}M \rightarrow T_{\Gamma(s_1,t_2)}M.\]

Likewise, the parallel transport along the transverse curve \(\Gamma^{(t_1)}\) from time \(s_1\) to time \(s_2\) is defined as

\[P_{s_1,t_1}^{s_2,t_1} = P_{s_1,s_2}^{\Gamma^{(t_1)}} : T_{\Gamma(s_1,t_1)}M \rightarrow T_{\Gamma(s_2,t_1)}M.\]

We are now prepared to define the curvature measure proposed in the introduction.

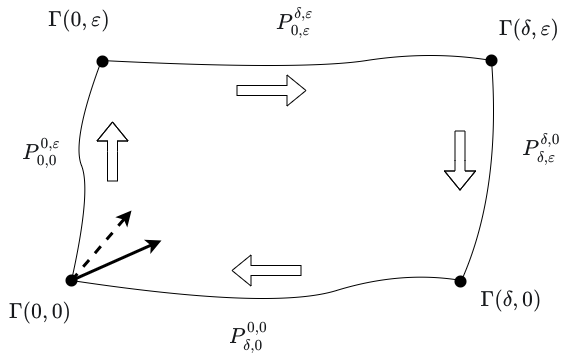

Definition (Displacement). Given a Riemannian (or semi-Riemannian) manifold \(M\) and a smooth family of curves \(\Gamma : I \times I \rightarrow M\) defined on an open interval \(I \subseteq \mathbb{R}\) such that \(0 \in I\), the displacement along \(\Gamma\) at the point \(p = \Gamma(0,0)\) is defined for each \(z \in T_pM\) as follows:

\[\mathcal{D}_p(z) = \lim_{\delta,\varepsilon \to 0}\frac{P_{\delta,0}^{0,0} \circ P_{\delta,\varepsilon}^{\delta,0} \circ P_{0,\varepsilon}^{\delta,\varepsilon} \circ P_{0,0}^{0,\varepsilon} (z) - z}{\delta\varepsilon}.\]

The displacement is the limiting ratio of the displacement vector resulting from parallel transport around the "rectangular" loop and the area \(\delta\varepsilon\) of the rectangular interval \((0, \delta) \times (0,\varepsilon)\) in the domain of \(\Gamma\).

The limit \(\lim_{x,y \to a}f(x,y) = L\) is interpreted as follows: for all \(\varepsilon \gt 0\), there exists a \(\delta \gt 0\) such that \(\lVert f(x,y)-L \rVert \lt \varepsilon\) whenever both \(0 \lt \lVert x-a \rVert \lt \delta\) and \(0 \lt \lVert y-a \rVert \lt \delta\). Whenever this double limit exists and the inner limit \(\lim_{y \to a}f(x,y)\) exists for each \(x\) near \(a\), it coincides with the iterated limit \(\lim_{x \to a}\lim_{y \to a}f(x,y)\); we assume this throughout.

This process is depicted in the figure above.

Let \(Z\) denote the vector field along \(\Gamma\) defined by first parallel transporting the tangent vector \(Z(0,0) = z\) "vertically" (according to the figure) along the primary curve \(\Gamma_0\) and then, for each \(t\), parallel transporting "horizontally" (according to the figure) along the transverse curve \(\Gamma^{(t)}\). Then, \(Z\) represents the process of transporting a vector \(z\) around the closed, rectangular loop as depicted in the figure. \(Z\) can thus be defined as follows:

\[Z(s,t) = P_{0,t}^{s,t}(P_{0,0}^{0,t}(z)).\]

This process is analogous to a derivative, and indeed bears a resemblance to the formula for the covariant derivative of a vector field \(V\) along a curve \(\gamma\) in terms of parallel transport:

\[D_tV(t_0) = \lim_{t_1 \to 0}\frac{P^{\gamma}_{t_0 + t_1,t_0}V(t_0 + t_1) - V(t_0)}{t_1}.\]

This formula then implies that

\[D_sD_tZ(0,0) = \lim_{\delta \to 0}\frac{P_{\delta,0}^{0,0}(D_tZ(\delta,0)) - D_tZ(0,0)}{\delta}.\]

Furthermore, the same formula implies that

\[D_tZ(\delta,0) = \lim_{\varepsilon \to 0}\frac{P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon)) - Z(\delta,0)}{\varepsilon}\]

and

\[D_tZ(0,0) = \lim_{\varepsilon \to 0}\frac{P_{0,\varepsilon}^{0,0}(Z(0,\varepsilon)) - Z(0,0)}{\varepsilon}.\]

Now, recall that limits in finite-dimensional normed vector spaces are computed component-wise, meaning that \(\lim_{h \to a}f(h) = (\lim_{h \to a}f^j(h)) \cdot e_j\) for any basis \((e_j)\). It thus follows that, for any linear map \(A : V \rightarrow W\) between finite-dimensional normed vector spaces \(V\) and \(W\) and any basis \((e_j)\) for \(V\),

\begin{align}A(\lim_{h \to a}f(h)) &= A((\lim_{h \to a}f^j(h)) \cdot e_j) \\&= (\lim_{h \to a}f^j(h)) \cdot A(e_j) \\&= \lim_{h \to a}(f^j(h) \cdot A(e_j)) \\&= \lim_{h \to a}A(f^j(h) \cdot e_j) \\&=\lim_{h \to a}A(f(h)).\end{align}

Thus, limits "commute" with the application of linear maps. Since parallel transport is a linear map, it follows that

\begin{align}P_{\delta,0}^{0,0}(D_tZ(\delta,0)) &= P_{\delta,0}^{0,0}\left(\lim_{\varepsilon \to 0}\frac{P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon)) - Z(\delta,0)}{\varepsilon}\right) \\&= \lim_{\varepsilon \to 0} P_{\delta,0}^{0,0}\left(\frac{P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon)) - Z(\delta,0)}{\varepsilon}\right) \\&= \lim_{\varepsilon \to 0} \frac{P_{\delta,0}^{0,0}(P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon))) - P_{\delta,0}^{0,0}(Z(\delta,0))}{\varepsilon} .\end{align}

Then, substituting, we obtain

\begin{align}D_sD_tZ(0,0) &= \lim_{\delta \to 0}\frac{\left[\lim_{\varepsilon \to 0} \frac{P_{\delta,0}^{0,0}(P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon))) - P_{\delta,0}^{0,0}(Z(\delta,0))}{\varepsilon} \right] - \left[\lim_{\varepsilon \to 0}\frac{P_{0,\varepsilon}^{0,0}(Z(0,\varepsilon)) - Z(0,0)}{\varepsilon}\right]}{\delta} \\&= \lim_{\delta \to 0}\frac{\lim_{\varepsilon \to 0} \left[\frac{P_{\delta,0}^{0,0}(P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon))) - P_{\delta,0}^{0,0}(Z(\delta,0))}{\varepsilon} - \frac{P_{0,\varepsilon}^{0,0}(Z(0,\varepsilon)) - Z(0,0)}{\varepsilon}\right]}{\delta} \\&= \lim_{\delta \to 0}\lim_{\varepsilon \to 0}\frac{ \left[\frac{P_{\delta,0}^{0,0}(P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon))) - P_{\delta,0}^{0,0}(Z(\delta,0))}{\varepsilon} - \frac{P_{0,\varepsilon}^{0,0}(Z(0,\varepsilon)) - Z(0,0)}{\varepsilon}\right]}{\delta} \\&= \lim_{\delta,\varepsilon \to 0}\frac{P_{\delta,0}^{0,0}(P_{\delta,\varepsilon}^{\delta,0}(Z(\delta,\varepsilon))) - P_{\delta,0}^{0,0}(Z(\delta,0)) - P_{0,\varepsilon}^{0,0}(Z(0,\varepsilon)) + Z(0,0)}{\delta\varepsilon}.\end{align}

Next, recall that the parallel transport map is a linear isomorphism, whose inverse is parallel transport along the inverse curve (i.e. curve going "in the opposite direction" with the upper and lower indices swapped). Also note that \(Z\) is parallel along \(\Gamma_0\) and along \(\Gamma^{(t)}\) for every \(t\) by construction, and hence the result of parallel transport along such curves to an index is the same as the value of \(Z\) at the respective index. It then follows that

\begin{align}z &= Z(0,0) \\&= P_{0,\varepsilon}^{0,0}(P_{0,0}^{0,\varepsilon}(Z(0,0))) \\&= P_{0,\varepsilon}^{0,0}(Z(0,\varepsilon)),\end{align}

and likewise

\begin{align}z &= Z(0,0) \\&= P_{\delta,0}^{0,0}(P_{0,0}^{\delta,0}(Z(0,0))) \\&= P_{\delta,0}^{0,0}(Z(\delta,0)).\end{align}

It also follows that

\begin{align}Z(\delta,\varepsilon) &= P_{0,\varepsilon}^{\delta,\varepsilon}(Z(0,\varepsilon)) \\&= P_{0,\varepsilon}^{\delta,\varepsilon}(P_{0,0}^{0,\varepsilon}(z)).\end{align}

If we substitute this into the limit obtained previously, we obtain

\[D_sD_tZ(0,0) = \lim_{\delta,\varepsilon \to 0}\frac{P_{\delta,0}^{0,0} \circ P_{\delta,\varepsilon}^{\delta,0} \circ P_{0,\varepsilon}^{\delta,\varepsilon} \circ P_{0,0}^{0,\varepsilon} (z) - z}{\delta\varepsilon}.\]

Thus, we have obtained an equivalent expression for displacement:

\[\mathcal{D}_p(z) = D_sD_tZ(0,0),\]

where \(\Gamma\) is an appropriately defined family of curves, \(Z\) is an appropriately defined vector field along \(\Gamma\), and \(Z(0,0) = z\).

Definition (Displacement [Alternative]). Given a Riemannian (or semi-Riemannian) manifold \(M\) and a smooth family of curves \(\Gamma : I \times I \rightarrow M\) defined on an open interval \(I \subseteq \mathbb{R}\) such that \(0 \in I\), the displacement along \(\Gamma\) at the point \(p = \Gamma(0,0)\) is defined for every tangent vector \(z \in T_pM\) as

\[\mathcal{D}_p(z) = D_sD_tZ(0,0),\]

where \(Z\) is the vector field along \(\Gamma\) defined as

\[Z(s,t) = P_{0,t}^{s,t}(P_{0,0}^{0,t}(z)).\]
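The displacement can be estimated numerically by transporting a vector around a small coordinate rectangle. The sketch below is one way to do this under several assumptions that are not part of the text above: the manifold is the unit sphere in coordinates \((\theta, \phi)\) (colatitude, longitude), whose nonzero Christoffel symbols are \(\Gamma^\theta_{\phi\phi} = -\sin\theta\cos\theta\) and \(\Gamma^\phi_{\theta\phi} = \Gamma^\phi_{\phi\theta} = \cot\theta\); the family is \(\Gamma(s,t) = (\theta_0 + t, \phi_0 + s)\); and the parallel transport equation is integrated with a classical Runge-Kutta scheme. The function names are hypothetical. For this particular family, a hand computation suggests that the limit is \((-\sin^2\theta_0 \, z^\phi, z^\theta)\) in coordinate components, which the estimate is compared against.

```python
import math

def rhs(theta, dtheta, dphi, v):
    """Right-hand side of the parallel transport ODE on the unit sphere:
    dV^k/dtau = -Gamma^k_ij * xdot^i * V^j, with xdot = (dtheta, dphi)."""
    v_th, v_ph = v
    cot = math.cos(theta) / math.sin(theta)
    return (math.sin(theta) * math.cos(theta) * dphi * v_ph,
            -cot * (dtheta * v_ph + dphi * v_th))

def transport(start, end, v, steps=200):
    """Parallel transport v along the straight coordinate segment from
    start to end (tau runs over [0, 1]), using RK4."""
    (th0, ph0), (th1, ph1) = start, end
    dth, dph = th1 - th0, ph1 - ph0
    h = 1.0 / steps

    def f(tau, w):
        return rhs(th0 + tau * dth, dth, dph, w)

    def shifted(w, k, c):
        return (w[0] + c * k[0], w[1] + c * k[1])

    for n in range(steps):
        tau = n * h
        k1 = f(tau, v)
        k2 = f(tau + h / 2, shifted(v, k1, h / 2))
        k3 = f(tau + h / 2, shifted(v, k2, h / 2))
        k4 = f(tau + h, shifted(v, k3, h))
        v = (v[0] + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
             v[1] + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))
    return v

def displacement_estimate(theta0, phi0, z, delta, eps):
    """Finite (delta, eps) version of the displacement quotient."""
    a = (theta0, phi0)                  # Gamma(0, 0)
    b = (theta0 + eps, phi0)            # Gamma(0, eps)
    c = (theta0 + eps, phi0 + delta)    # Gamma(delta, eps)
    d = (theta0, phi0 + delta)          # Gamma(delta, 0)
    w = transport(a, b, z)              # P_{0,0}^{0,eps}
    w = transport(b, c, w)              # P_{0,eps}^{delta,eps}
    w = transport(c, d, w)              # P_{delta,eps}^{delta,0}
    w = transport(d, a, w)              # P_{delta,0}^{0,0}
    area = delta * eps
    return ((w[0] - z[0]) / area, (w[1] - z[1]) / area)

theta0, z = math.pi / 3, (1.0, 0.5)
estimate = displacement_estimate(theta0, 0.0, z, 1e-2, 1e-2)
expected = (-math.sin(theta0) ** 2 * z[1], z[0])   # hand-computed limit
```

With \(\delta = \varepsilon = 10^{-2}\) the estimate should agree with the hand-computed value only up to an error of order \(\varepsilon\), since the quotient is evaluated on a rectangle of finite size.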

Although this is a useful and intuitive characterization of curvature, it is not yet complete since it is restricted to vector fields along families of curves. Instead, we seek a characterization of curvature which is applicable to arbitrary vector fields. The displacement operation indicates that curvature is somehow related to second covariant derivatives.

It is often instructive to consider the special case of the Euclidean connection \(\bar{\nabla}\). Recall that the Euclidean connection can be expressed in terms of smooth coordinates as

\[\bar{\nabla}_XY = X(Y^i)\partial_i.\]

We then compute the following in smooth coordinates (noting that \(\bar{\nabla}_X\partial_i = 0\) for any \(X\) in the Euclidean connection):

\begin{align}\bar{\nabla}_X\bar{\nabla}_YZ &= \bar{\nabla}_X(Y(Z^i)\partial_i) \\&= Y(Z^i)\bar{\nabla}_X\partial_i + X(Y(Z^i))\partial_i & \text{(Product rule)}\\&= X(Y(Z^i))\partial_i.\end{align}

Likewise, transposing \(X\) and \(Y\), it follows that

\[\bar{\nabla}_Y\bar{\nabla}_XZ = Y(X(Z^i))\partial_i.\]

Again, it also follows that

\begin{align}\bar{\nabla}_{[X,Y]}Z &= [X,Y](Z^i)\partial_i \\&= (XY - YX)(Z^i)\partial_i \\&= X(Y(Z^i))\partial_i - Y(X(Z^i))\partial_i \\&= \bar{\nabla}_X\bar{\nabla}_YZ - \bar{\nabla}_Y\bar{\nabla}_XZ.\end{align}

Thus, for the Euclidean connection,

\[\bar{\nabla}_X\bar{\nabla}_YZ - \bar{\nabla}_Y\bar{\nabla}_XZ - \bar{\nabla}_{[X,Y]}Z = 0.\]

Therefore, even for the Euclidean connection, the second covariant derivative \(\bar{\nabla}_X\bar{\nabla}_YZ\) alone does not vanish; rather, it is the full combination of terms in the expression above that vanishes identically.

We therefore generalize this formula and propose the following characterization of curvature for the Levi-Civita connection on arbitrary Riemannian (or semi-Riemannian) manifolds.

Definition (Riemann Curvature Endomorphism). For any Riemannian (or semi-Riemannian) manifold \(M\) and smooth vector fields \(X\) and \(Y\) on \(M\), the (Riemann) curvature endomorphism determined by \(X\) and \(Y\) is the map \(R(X,Y) : \mathfrak{X}(M) \rightarrow \mathfrak{X}(M)\), where \(R : \mathfrak{X}(M) \times \mathfrak{X}(M) \times \mathfrak{X}(M) \rightarrow \mathfrak{X}(M)\) is defined for all \(Z \in \mathfrak{X}(M)\) as

\[R(X,Y)Z = \nabla_X\nabla_YZ - \nabla_Y\nabla_XZ - \nabla_{[X,Y]}Z.\]

Thus, for the Euclidean connection, \(R(X,Y)Z = 0\) for all vector fields \(X\),\(Y\), and \(Z\).

The following theorem holds (but its proof would require a detour, so it is omitted):

Theorem. A Riemannian (or semi-Riemannian) manifold is flat if and only if its curvature tensor vanishes identically.

Thus, the relation above holds for all flat manifolds (i.e. those locally isometric to Euclidean space). This verifies that the curvature endomorphism characterizes flatness, and likewise provides a concrete measure of the deviation from flatness for non-flat manifolds.

Next, we want to confirm that the Riemann curvature endomorphism is multilinear over \(C^{\infty}(M)\), which will allow us to derive a corresponding tensor field from this endomorphism.

First, note that

\begin{align}R(fX,Y)Z &= \nabla_{fX}\nabla_YZ - \nabla_Y\nabla_{fX}Z - \nabla_{[fX,Y]}Z \\&= f\nabla_{X}\nabla_YZ - \nabla_Y(f\nabla_{X}Z) - \nabla_{(fXY - Y(fX))}Z \\&= f\nabla_{X}\nabla_YZ - f\nabla_Y\nabla_XZ - Yf\nabla_XZ - \nabla_{(fXY - fYX - YfX)}Z \\&= f\nabla_{X}\nabla_YZ - f\nabla_Y\nabla_{X}Z - Yf\nabla_XZ - \nabla_{(f[X,Y] - YfX)}Z \\&= f\nabla_{X}\nabla_YZ - f\nabla_Y\nabla_{X}Z - Yf\nabla_XZ -f\nabla_{[X,Y]}Z + Yf\nabla_{X}Z \\&= f\nabla_{X}\nabla_YZ - f\nabla_Y\nabla_{X}Z -f\nabla_{[X,Y]}Z \\&= fR(X,Y)Z.\end{align}

A similar calculation shows that \(R(X,fY)Z = fR(X,Y)Z\). Finally, note that

\begin{align}R(X,Y)(fZ) &= \nabla_X\nabla_Y(fZ) - \nabla_Y\nabla_X(fZ) - \nabla_{[X,Y]}(fZ) \\&= \nabla_X(f\nabla_YZ + YfZ) - \nabla_Y(f\nabla_XZ + XfZ) - (f\nabla_{[X,Y]}Z + [X,Y]fZ) \\&= \nabla_X(f\nabla_YZ) + \nabla_X(YfZ) - \nabla_Y(f\nabla_XZ) - \nabla_Y(XfZ) - f\nabla_{[X,Y]}Z - [X,Y]fZ \\&= (f\nabla_X\nabla_YZ + Xf\nabla_YZ) + (Yf\nabla_XZ + XYfZ) - (f\nabla_Y\nabla_XZ + Yf\nabla_XZ) - (Xf\nabla_YZ + YXfZ) - f\nabla_{[X,Y]}Z - (XYfZ - YXfZ) \\&= f\nabla_X\nabla_YZ - f\nabla_Y\nabla_XZ - f\nabla_{[X,Y]}Z \\&= fR(X,Y)Z.\end{align}

Tensors and Multilinear Maps

Tensor fields of type \((k+1,l)\) can be characterized by maps that are multilinear over \(C^{\infty}(M)\), either with signature

\[\underbrace{\mathfrak{X}^*(M) \times \dots \times \mathfrak{X}^*(M)}_{k+1~ \text{times}} \times \underbrace{\mathfrak{X}(M) \times \dots \times \mathfrak{X}(M)}_{l ~\text{times}} \rightarrow C^{\infty}(M)\]

or with signature

\[\underbrace{\mathfrak{X}^*(M) \times \dots \times \mathfrak{X}^*(M)}_{k~ \text{times}} \times \underbrace{\mathfrak{X}(M) \times \dots \times \mathfrak{X}(M)}_{l ~\text{times}} \rightarrow \mathfrak{X}(M).\]

In this section we will review these results, which are crucial for formulating a curvature tensor based on the curvature endomorphism.

Linear Maps

For any finite-dimensional vector spaces \(V\) and \(W\),

\[[V,W] \cong L(W^*, V;\mathbb{R}),\]

where \([V,W]\) denotes the vector space of linear maps with signature \(V \rightarrow W\) and \(L(W^*,V;\mathbb{R})\) denotes the vector space of multilinear maps with signature \(W^* \times V \rightarrow \mathbb{R}\).

This isomorphism is witnessed by the following map:

\[\varphi(A)(\omega, v) = \omega(Av).\]

This is indeed an isomorphism when \(V\) and \(W\) are finite-dimensional, since it is linear, has a trivial kernel, and maps between spaces of the same dimension. Given a basis \((E^W_i)\) for \(W\) with corresponding dual basis \((\varepsilon_W^i)\), the inverse mapping can be defined explicitly:

\[\varphi^{-1}(L)(v) = L(\varepsilon_W^i, v) \cdot E^W_i.\]

We then compute

\begin{align}\varphi(\varphi^{-1}(L))(\omega,v) &= \omega(L(\varepsilon_W^i, v) \cdot E^W_i) \\&= L(\varepsilon_W^i, v) \cdot \omega(E^W_i) \\&= \omega_i \cdot L(\varepsilon_W^i, v) \\&= L(\omega_i \cdot \varepsilon_W^i, v) \\&= L(\omega, v).\end{align}

Likewise, we also compute

\begin{align}\varphi^{-1}(\varphi(A))(v) &= \varepsilon_W^i(Av) \cdot E^W_i \\&= [Av]^i \cdot E^W_i \\&= Av.\end{align}

If \(\varphi^{-1}(L) = A\), then, given a basis \((E^V_j)\) for \(V\), it follows that

\begin{align}A(E^V_j) &= \varphi^{-1}(L)(E^V_j) \\&= L(\varepsilon^i_W, E^V_j) \cdot E^W_i \\&= L^i_j \cdot E^W_i,\end{align}

where \(L^i_j\) are the components of the tensor, and likewise if \(\varphi(A) = L\), then it follows that

\begin{align}L^i_j &= L(\varepsilon_W^i, E^V_j) \\&= \varphi(A)(\varepsilon_W^i, E^V_j) \\&= \varepsilon_W^i(A(E^V_j)) \\&= [A(E^V_j)]^i.\end{align}

Thus, if only \(A\) is known, the tensor components \(L^i_j\) can be computed in this manner.
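The correspondence between a linear map and its bilinear counterpart can be made concrete with matrices. The following sketch assumes \(V = \mathbb{R}^3\) and \(W = \mathbb{R}^2\) with their standard bases, represents \(A\) as a list of rows, and represents covectors as lists of components; the names `phi` and `phi_inverse` mirror the maps above but are otherwise illustrative.

```python
def phi(A):
    """Turn a linear map A (a matrix given as a list of rows) into the
    bilinear map L(omega, v) = omega(A v)."""
    def L(omega, v):
        Av = [sum(A[i][j] * v[j] for j in range(len(v)))
              for i in range(len(A))]
        return sum(omega[i] * Av[i] for i in range(len(A)))
    return L

def phi_inverse(L, dim_w, dim_v):
    """Recover the matrix of A from L via the components
    L^i_j = L(eps^i, E_j), using the standard bases."""
    def basis(n, k):
        return [1.0 if m == k else 0.0 for m in range(n)]
    return [[L(basis(dim_w, i), basis(dim_v, j)) for j in range(dim_v)]
            for i in range(dim_w)]

A = [[1.0, 2.0, 0.0],
     [0.0, -1.0, 3.0]]           # a linear map from R^3 to R^2
L = phi(A)
components = phi_inverse(L, 2, 3)
value = L([1.0, 1.0], [1.0, 1.0, 1.0])
```

In this setting `components` reproduces the matrix `A` entry by entry, which is the statement \(L^i_j = [A(E^V_j)]^i\) in coordinates.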

Multilinear Maps

Moreover, for any finite-dimensional vector spaces \(V_1,\dots,V_k\) and \(W\),

\[L(V_1,\dots,V_k; W) \cong L(W^*, V_1,\dots,V_k;\mathbb{R}).\]

This isomorphism is witnessed by the following map:

\[\varphi(A)(\omega, v_1,\dots,v_k) = \omega(A(v_1,\dots,v_k)).\]

This is indeed an isomorphism in the finite-dimensional case, since it is linear, has a trivial kernel, and maps between spaces of the same dimension. Given a basis \((E^W_i)\) for \(W\) with corresponding dual basis \((\varepsilon_W^i)\), the inverse mapping can be defined explicitly:

\[\varphi^{-1}(L)(v_1,\dots,v_k) = L(\varepsilon_W^i, v_1,\dots,v_k) \cdot E^W_i.\]

Multilinear Maps on a Vector Space

This can be restricted to multilinear maps "on" a vector space \(V\), i.e. either covariant maps

\[L(\underbrace{V,\dots,V}_{k~\text{times}};V) \cong L(V^*, \underbrace{V,\dots,V}_{k~\text{times}};\mathbb{R})\]

as witnessed by the map

\[\varphi(A)(\omega, v_1,\dots,v_k) = \omega(A(v_1,\dots,v_k)),\]

or contravariant maps

\[L(\underbrace{V^*,\dots,V^*}_{k~\text{times}};V) \cong L(\underbrace{V^*,\dots,V^*}_{k+1~\text{times}};\mathbb{R})\]

as witnessed by the map

\[\varphi(A)(\omega^1,\dots,\omega^{k+1}) = \omega^{k+1}(A(\omega^1, \dots, \omega^k)),\]

or maps of mixed variance

\[ L(\underbrace{V^*,\dots,V^*}_{k~\text{times}},\underbrace{V,\dots,V}_{l~\text{times}};V) \cong L(\underbrace{V^*,\dots,V^*}_{k+1~\text{times}},\underbrace{V,\dots,V}_{l~\text{times}};\mathbb{R})\]

as witnessed by the map

\[\varphi(A)(\omega^1,\dots,\omega^{k+1},v_1,\dots,v_l) = \omega^{k+1}(A(\omega^1,\dots,\omega^k, v_1,\dots,v_l)).\]

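This is indeed an isomorphism in the finite-dimensional case, since it is linear, has a trivial kernel, and maps between spaces of the same dimension. Given a basis \((E^W_i)\) for the codomain (here \(W = V\)) with corresponding dual basis \((\varepsilon_W^i)\), the inverse mapping can be defined explicitly: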

\[\varphi^{-1}(L)(\omega^1,\dots,\omega^k, v_1,\dots,v_l) = L(\omega^1,\dots,\omega^k, \varepsilon_W^{i_{k+1}}, v_1,\dots,v_l) \cdot E^W_{i_{k+1}}.\]

If \(\varphi^{-1}(L) = A\), then it follows that

\begin{align}A(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_k},E_{j_1},\dots,E_{j_l}) &= \varphi^{-1}(L)(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_k},E_{j_1},\dots,E_{j_l}) \\&= L(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_{k+1}},E_{j_1},\dots,E_{j_l}) \cdot E^W_{i_{k+1}} \\&= L^{i_1,\dots,i_{k+1}}_{j_1,\dots,j_l} \cdot E^W_{i_{k+1}},\end{align}

and likewise if \(\varphi(A) = L\), then it follows that

\begin{align}L^{i_1,\dots,i_{k+1}}_{j_1,\dots,j_l} &= L(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_{k+1}},E_{j_1},\dots,E_{j_l}) \\&= \varphi(A)(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_{k+1}},E_{j_1},\dots,E_{j_l}) \\&= \varepsilon_W^{i_{k+1}}(A(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_k},E_{j_1},\dots,E_{j_l})) \\&= [A(\varepsilon_W^{i_1},\dots,\varepsilon_W^{i_k},E_{j_1},\dots,E_{j_l})]^{i_{k+1}}.\end{align}

Thus, if only \(A\) is known, the tensor components \(L^{i_1,\dots,i_{k+1}}_{j_1,\dots,j_l}\) can be computed in this manner.

The Characterization Lemma

Given any tensor field \(F : M \rightarrow T^{(k,l)}TM\), smooth covector fields \(\omega^1,\dots,\omega^k\), and smooth vector fields \(X_1,\dots,X_l\), it is possible to define a corresponding function \(\tilde{F}(\omega^1,\dots,\omega^k,X_1,\dots,X_l) : U \rightarrow \mathbb{R}\) on any open subset \(U \subseteq M\) on which each of the covector and vector fields are defined, as follows:

\[\tilde{F}(\omega^1,\dots,\omega^k,X_1,\dots,X_l)(p) = F_p(\omega^1_p,\dots,\omega^k_p,X_1\rvert_p,\dots,X_l\rvert_p).\]

It can be shown that this function is smooth. Thus, \(\tilde{F}\) represents a map with signature

\[\tilde{F} : \underbrace{\mathfrak{X}^*(M) \times \dots \times \mathfrak{X}^*(M)}_{k~ \text{times}} \times \underbrace{\mathfrak{X}(M) \times \dots \times \mathfrak{X}(M)}_{l ~\text{times}} \rightarrow C^{\infty}(M).\]

This map is multilinear over \(C^{\infty}(M)\). Moreover, it can be shown that any multilinear map over \(C^{\infty}(M)\) with this signature induces a corresponding tensor field.

Then, given any multilinear map \(A\) with signature

\[A : \underbrace{\mathfrak{X}^*(M) \times \dots \times \mathfrak{X}^*(M)}_{k~ \text{times}} \times \underbrace{\mathfrak{X}(M) \times \dots \times \mathfrak{X}(M)}_{l ~\text{times}} \rightarrow \mathfrak{X}(M),\]

such a map induces a multilinear map \(\varphi(A)\) with signature

\[\varphi(A) : \underbrace{\mathfrak{X}^*(M) \times \dots \times \mathfrak{X}^*(M)}_{k+1~ \text{times}} \times \underbrace{\mathfrak{X}(M) \times \dots \times \mathfrak{X}(M)}_{l ~\text{times}} \rightarrow C^{\infty}(M)\]

defined as

\[\varphi(A)(\omega^1,\dots,\omega^{k+1},X_1,\dots,X_l) = \omega^{k+1}(A(\omega^1,\dots,\omega^k,X_1,\dots,X_l)).\]

This map is an isomorphism: it has a trivial kernel, and its inverse can be written explicitly. Given a coordinate frame \((\partial_j)\) with corresponding dual frame \((dx^j)\), the inverse mapping is given locally by

\[\varphi^{-1}(L)(\omega^1,\dots,\omega^k, X_1,\dots,X_l) = L(\omega^1,\dots,\omega^k,dx^{i_{k+1}},X_1,\dots,X_l) \cdot \partial_{i_{k+1}}.\]

If \(\varphi^{-1}(L) = A\), then it follows that

\begin{align}A(dx^{i_1},\dots,dx^{i_k},\partial_{j_1},\dots,\partial_{j_l}) &= \varphi^{-1}(L)(dx^{i_1},\dots,dx^{i_k},\partial_{j_1},\dots,\partial_{j_l}) \\&= L(dx^{i_1},\dots,dx^{i_{k+1}},\partial_{j_1},\dots,\partial_{j_l}) \cdot \partial_{i_{k+1}} \\&= L^{i_1,\dots,i_{k+1}}_{j_1,\dots,j_l} \cdot \partial_{i_{k+1}},\end{align}

and if \(\varphi(A) = L\), then it follows that

\begin{align}L^{i_1,\dots,i_{k+1}}_{j_1,\dots,j_l} &= L(dx^{i_1},\dots,dx^{i_{k+1}},\partial_{j_1},\dots,\partial_{j_l}) \\&= \varphi(A)(dx^{i_1},\dots,dx^{i_{k+1}},\partial_{j_1},\dots,\partial_{j_l}) \\&= dx^{i_{k+1}}(A(dx^{i_1},\dots,dx^{i_k},\partial_{j_1},\dots,\partial_{j_l})) \\&= [A(dx^{i_1},\dots,dx^{i_k},\partial_{j_1},\dots,\partial_{j_l})]^{i_{k+1}}.\end{align}

Thus, if only \(A\) is known, the tensor components \(L^{i_1,\dots,i_{k+1}}_{j_1,\dots,j_l}\) can be computed in this manner.

Thus, since such multilinear maps induce tensor fields, they are often identified with tensor fields and referred to as "tensors" themselves.

The Curvature Tensor

The curvature map has signature

\[R : \mathfrak{X}(M) \times \mathfrak{X}(M) \times \mathfrak{X}(M) \rightarrow \mathfrak{X}(M).\]

As indicated in the previous section, there is a corresponding map

\[\varphi(R) : \mathfrak{X}^*(M) \times \mathfrak{X}(M) \times \mathfrak{X}(M) \times \mathfrak{X}(M) \rightarrow C^{\infty}(M)\]

defined as

\[\varphi(R)(\omega,X,Y,Z) = \omega(R(X,Y,Z)).\]

Thus, as indicated in the previous section, there exists a corresponding tensor field \(F = \varphi(R)\).

Note that, from here on, we adopt the convention that the covector argument of \(F\) is placed last, so that the final index is the contravariant index of the corresponding tensor.

Given a coordinate frame \((\partial_j)\) with corresponding dual frame \((dx^i)\), an inverse mapping can be computed explicitly as

\[\varphi^{-1}(F)(X,Y,Z) = F(X,Y,Z,dx^i) \cdot \partial_i.\]

Note that \(dx^i\) appeared as the last argument, since we are adopting a different convention regarding the ordering of indices. We can now compute the components of this tensor \(F\); if \(\varphi^{-1}(F) = R\), then

\begin{align}R(\partial_i, \partial_j, \partial_k) &= \varphi^{-1}(F)(\partial_i, \partial_j, \partial_k) \\&= F(\partial_i, \partial_j, \partial_k, dx^l) \cdot \partial_l \\&= F_{ijk}^l \cdot \partial_l,\end{align}

and likewise if \(\varphi(R) = F\), then

\begin{align}F_{ijk}^l &= F(\partial_i, \partial_j, \partial_k, dx^l) \\&= \varphi(R)(\partial_i, \partial_j, \partial_k, dx^l) \\&= dx^l(R(\partial_i, \partial_j, \partial_k)) \\&= [R(\partial_i, \partial_j, \partial_k)]^l.\end{align}

Note that we use the same notation \(R\) to refer to the tensor \(F\), so that

\[R_{ijk}^l \cdot \partial_l = R(\partial_i, \partial_j, \partial_k),\]

or, equivalently,

\[R_{ijk}^l = dx^l(R(\partial_i, \partial_j, \partial_k)).\]

Thus, this tensor can be written in terms of its components as

\[R = R_{ijk}^l \cdot dx^i \otimes dx^j \otimes dx^k \otimes \partial_l.\]

We refer to this tensor field as the \(\mathbf{(1,3)}\)-curvature tensor.

Next, we will compute the coordinate expression for this tensor. Recall that the coordinate expression for the covariant derivative is

\[\nabla_XY = X(Y^j)\partial_j + X^iY^j\Gamma_{ij}^k \partial_k.\]

Note that

\[\nabla_{\partial_j}\partial_k = \Gamma_{jk}^l \partial_l,\]

\[\nabla_{\partial_i}\partial_k = \Gamma_{ik}^l \partial_l,\]

and

\[\nabla_{[\partial_i,\partial_j]}\partial_k = 0.\]

Then, we compute

\begin{align}R(\partial_i, \partial_j, \partial_k) &= \nabla_{\partial_i}\nabla_{\partial_j}\partial_k - \nabla_{\partial_j}\nabla_{\partial_i}\partial_k - \nabla_{[\partial_i,\partial_j]}\partial_k \\&= \nabla_{\partial_i}(\Gamma_{jk}^l\partial_l) - \nabla_{\partial_j}(\Gamma_{ik}^l\partial_l) \\&= \Gamma_{jk}^l\nabla_{\partial_i}\partial_l + \partial_i\Gamma_{jk}^l\partial_l - (\Gamma_{ik}^l\nabla_{\partial_j}\partial_l + \partial_j\Gamma_{ik}^l\partial_l) \\&= \Gamma_{jk}^l\nabla_{\partial_i}\partial_l + \partial_i\Gamma_{jk}^l\partial_l - \Gamma_{ik}^l\nabla_{\partial_j}\partial_l - \partial_j\Gamma_{ik}^l\partial_l \\&= \Gamma_{jk}^l\Gamma_{il}^m\partial_m + \partial_i\Gamma_{jk}^l\partial_l - \Gamma_{ik}^l\Gamma_{jl}^m\partial_m - \partial_j\Gamma_{ik}^l\partial_l \\&= \Gamma_{jk}^m\Gamma_{im}^l\partial_l + \partial_i\Gamma_{jk}^l\partial_l - \Gamma_{ik}^m\Gamma_{jm}^l\partial_l - \partial_j\Gamma_{ik}^l\partial_l & \text{(transposing dummy indices \(m\) and \(l\))} \\&= (\partial_i\Gamma_{jk}^l - \partial_j\Gamma_{ik}^l+ \Gamma_{jk}^m\Gamma_{im}^l - \Gamma_{ik}^m\Gamma_{jm}^l)\partial_l. \end{align}

It thus follows that

\[R_{ijk}^l = \partial_i\Gamma_{jk}^l - \partial_j\Gamma_{ik}^l+ \Gamma_{jk}^m\Gamma_{im}^l - \Gamma_{ik}^m\Gamma_{jm}^l.\]
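The coordinate formula can be evaluated directly once the Christoffel symbols are known. The sketch below does this for the unit sphere in coordinates \((\theta,\phi)\), which is an example chosen here rather than one treated in the text; it assumes the standard Christoffel symbols \(\Gamma^\theta_{\phi\phi} = -\sin\theta\cos\theta\) and \(\Gamma^\phi_{\theta\phi} = \Gamma^\phi_{\phi\theta} = \cot\theta\), and approximates the partial derivatives by central differences. All function names are hypothetical.

```python
import math

N = 2  # index 0 = theta (colatitude), index 1 = phi (longitude)

def christoffel(theta):
    """G[l][i][j] = Gamma^l_{ij} for the unit sphere."""
    G = [[[0.0] * N for _ in range(N)] for _ in range(N)]
    G[0][1][1] = -math.sin(theta) * math.cos(theta)
    G[1][0][1] = G[1][1][0] = math.cos(theta) / math.sin(theta)
    return G

def christoffel_derivatives(theta, h=1e-5):
    """dG[m][l][i][j] = partial_m Gamma^l_{ij}. The symbols depend only
    on theta, so the phi-derivatives vanish."""
    plus, minus = christoffel(theta + h), christoffel(theta - h)
    d_theta = [[[(plus[l][i][j] - minus[l][i][j]) / (2 * h)
                 for j in range(N)] for i in range(N)] for l in range(N)]
    d_phi = [[[0.0] * N for _ in range(N)] for _ in range(N)]
    return [d_theta, d_phi]

def riemann(theta):
    """R[i][j][k][l] = R_{ijk}^l computed from the coordinate formula."""
    G, dG = christoffel(theta), christoffel_derivatives(theta)
    R = [[[[0.0] * N for _ in range(N)] for _ in range(N)] for _ in range(N)]
    for i in range(N):
        for j in range(N):
            for k in range(N):
                for l in range(N):
                    value = dG[i][l][j][k] - dG[j][l][i][k]
                    for m in range(N):
                        value += (G[m][j][k] * G[l][i][m]
                                  - G[m][i][k] * G[l][j][m])
                    R[i][j][k][l] = value
    return R

theta = 1.0
R = riemann(theta)
```

For the unit sphere one expects \(R_{\theta\phi\phi}^{\theta} = \sin^2\theta\) and \(R_{\theta\phi\theta}^{\phi} = -1\), together with antisymmetry in the first two indices; the computed entries `R[0][1][1][0]` and `R[0][1][0][1]` can be compared against these values up to finite-difference error.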

It is often more convenient to work with a covariant tensor rather than a tensor of mixed variance. Thus, we define a map

\[\mathrm{Rm} : \mathfrak{X}(M) \times \mathfrak{X}(M) \times \mathfrak{X}(M) \times \mathfrak{X}(M) \rightarrow C^{\infty}(M)\]

called the Riemann curvature tensor, as

\[\mathrm{Rm}(X,Y,Z,W) = \langle R(X,Y,Z),W \rangle_g,\]

where \(\langle -,-\rangle_g\) denotes the metric.

This map represents a \((0,4)\) tensor field by the characterization lemma. This is obtained from the \((1,3)\)-curvature tensor by lowering the final index, i.e.

\[R_{ijkl} = g_{lm}R_{ijk}^m.\]

This covariant tensor is thus expressed in coordinates as

\[R_{ijkl} = g_{lm}(\partial_i\Gamma_{jk}^m - \partial_j\Gamma_{ik}^m + \Gamma_{jk}^p\Gamma_{ip}^m - \Gamma_{ik}^p\Gamma_{jp}^m),\]

where we have renamed index \(l\) to \(m\) and index \(m\) to \(p\). Thus, this tensor has coordinate expression

\[\mathrm{Rm} = R_{ijkl}dx^i \otimes dx^j \otimes dx^k \otimes dx^l.\]

The Ricci Curvature Tensor

The Ricci curvature tensor \(\mathrm{Rc}\) is the trace (contraction) of the Riemann curvature tensor on the first and last indices, and thus has components \(R_{ij}\) defined as

\[R_{ij} = g^{km}R_{kijm}.\]

The Ricci curvature can be given a geometric interpretation in terms of sectional curvature.

The Scalar Curvature Function

The scalar curvature is a function (or a \((0,0)\)-tensor) which is the contraction of the Ricci curvature tensor. It is thus defined as

\[S = g^{ij}R_{ij} = g^{ij}g^{km}R_{kijm}.\]
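The contractions above are easy to carry out mechanically. The following sketch does so for the unit sphere in coordinates \((\theta,\phi)\), taking as given (not derived here) the metric \(g = \mathrm{diag}(1, \sin^2\theta)\) and the nonzero components \(R_{\theta\phi\phi}^{\theta} = \sin^2\theta\), \(R_{\theta\phi\theta}^{\phi} = -1\) and their antisymmetric partners. The function name `ricci_and_scalar` is an illustrative choice.

```python
import math

def ricci_and_scalar(theta):
    """Lower the last index of R_{ijk}^l, then contract to obtain the
    Ricci components R_{ij} and the scalar curvature S (unit sphere)."""
    n = range(2)  # index 0 = theta, index 1 = phi
    s2 = math.sin(theta) ** 2
    g = [[1.0, 0.0], [0.0, s2]]
    g_inv = [[1.0, 0.0], [0.0, 1.0 / s2]]

    # Assumed components R_up[i][j][k][l] = R_{ijk}^l of the unit sphere.
    R_up = [[[[0.0] * 2 for _ in n] for _ in n] for _ in n]
    R_up[0][1][1][0] = s2
    R_up[1][0][1][0] = -s2
    R_up[0][1][0][1] = -1.0
    R_up[1][0][0][1] = 1.0

    # R_{ijkl} = g_{lm} R_{ijk}^m
    R_low = [[[[sum(g[l][m] * R_up[i][j][k][m] for m in n)
                for l in n] for k in n] for j in n] for i in n]

    # R_{ij} = g^{km} R_{kijm}
    ricci = [[sum(g_inv[k][m] * R_low[k][i][j][m] for k in n for m in n)
              for j in n] for i in n]

    # S = g^{ij} R_{ij}
    scalar = sum(g_inv[i][j] * ricci[i][j] for i in n for j in n)
    return ricci, scalar

theta = 0.7
ricci, scalar = ricci_and_scalar(theta)
```

Under these assumptions the Ricci tensor comes out equal to the metric itself, \(R_{\theta\theta} = 1\) and \(R_{\phi\phi} = \sin^2\theta\), and the scalar curvature is the constant \(2\), independent of \(\theta\).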

The scalar curvature can likewise be given a geometric interpretation in terms of sectional curvature.

The Ricci and scalar curvature are useful since the Riemann curvature tensor is such a complicated construct. They summarize key information about curvature while being simpler to employ.